Measurement Traceability: The Engineered Backbone of Reliable Metrology

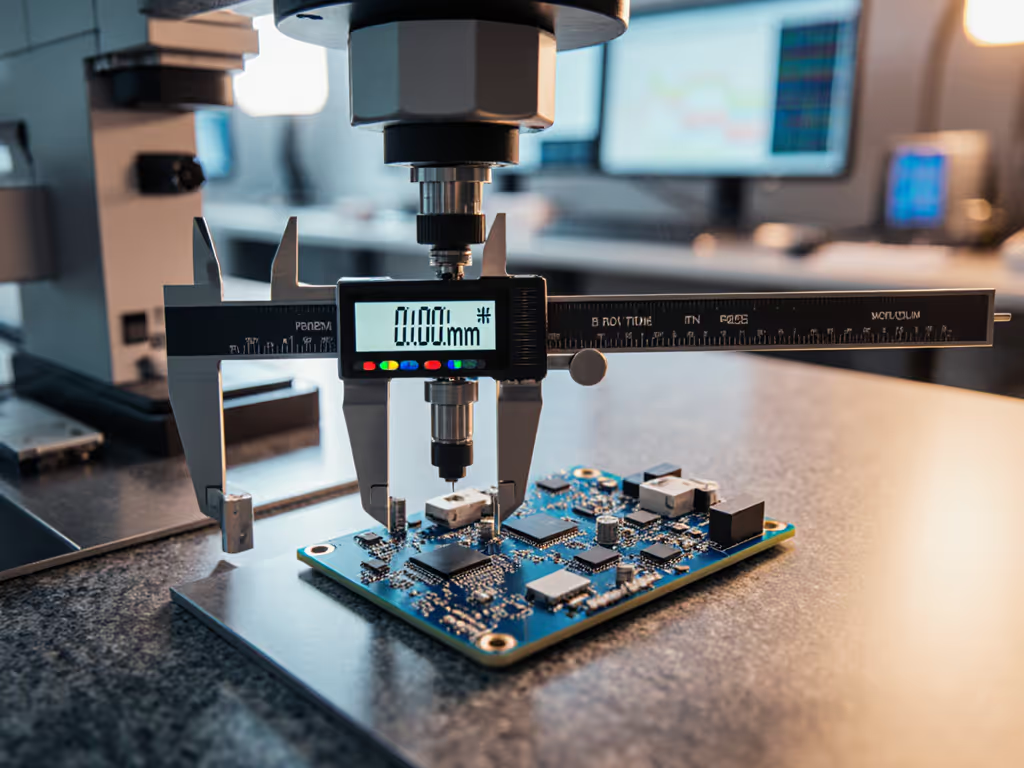

Measurement traceability (metrological traceability, in VIM terms) isn't a compliance checkbox. It's the engineered backbone that ensures your Wednesday-morning micrometer reading agrees with last Friday's CMM report, even when unexpected temperature swings threaten dimensional stability. As a metrology engineer who validates test rigs and correlates lab-to-shop data daily, I've watched teams treat traceability as paperwork rather than as a complete system. Let me be clear: measurement capability is engineered across tool, process, and environment; it isn't purchased off a shelf. This FAQ deep dive cuts through the certification noise to show how traceability actually functions in real manufacturing environments.

What Exactly Is Measurement Traceability?

Metrological traceability is more than "calibration." It is the documented property of a measurement result that links it to a reference through an unbroken chain of calibrations, with each step contributing to the overall uncertainty budget. The International Vocabulary of Metrology (VIM, JCGM 200:2012, published by the BIPM) makes this explicit in definition 2.41: "property of a measurement result whereby the result can be related to a reference through a documented unbroken chain of calibrations, each contributing to the measurement uncertainty."

This means your micrometer reading must ultimately connect to SI units through documented steps, not just through a "NIST-traceable" claim on a certificate. Each calibration transfer adds uncertainty. The result is a pyramid in which your shop-floor measurement inherits uncertainty from every link in the chain. For a step-by-step method to build and audit your uncertainty budget, see our dedicated guide. When reviewing calibration certificates, I always check that the stated units and conditions match actual usage. Temperature compensation referenced to 20°C won't help when your shop hits 28°C at noon.
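The short Python sketch below shows how that pyramid compounds. It assumes each link adds an uncorrelated standard uncertainty (k=1), so the contributions combine by root-sum-square, as in the GUM. The link names and micrometre values are hypothetical placeholders. They are not figures from any real certificate.

```python
import math

# Hypothetical calibration chain, top to bottom. Each value is the standard
# uncertainty (k=1) that link adds, in µm. Placeholder numbers only.
CHAIN = [
    ("National standard (NMI)", 0.02),
    ("Reference gage blocks (accredited lab)", 0.05),
    ("Working master (your lab)", 0.30),
    ("Shop-floor micrometer", 1.00),
]


def cumulative_uncertainty(chain):
    """Return (link name, combined standard uncertainty) after each transfer.

    Assumes the contributions are uncorrelated, so they add in quadrature
    (root-sum-square). Correlated links would need covariance terms.
    """
    total_sq = 0.0
    result = []
    for name, u in chain:
        total_sq += u ** 2
        result.append((name, math.sqrt(total_sq)))
    return result


for name, u_c in cumulative_uncertainty(CHAIN):
    # Expanded uncertainty with coverage factor k=2 (roughly 95 % coverage
    # if the combined distribution is approximately normal)
    print(f"{name:40s} u_c = {u_c:.3f} µm   U(k=2) = {2 * u_c:.3f} µm")
```

The combined value can only grow as you move down the chain, and the largest contributor dominates it. An excellent national standard at the top cannot rescue a sloppy link near the bottom.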

Shop by tolerance stack, environment, and workflow, or accept drift.

Why Do Calibration Chains Break Down in Manufacturing?

In my experience, most chain failures happen when teams ignore the explicit tolerances and uncertainties at each link of the calibration chain. Consider a common scenario. Your quality lab uses gage blocks certified to ISO 3650, but your shop-floor calipers skip the intermediate standards and claim "direct NIST traceability" without documenting how. That leaves uncertainty gaps that no one has quantified. To prevent them, review our overview of the measurement error types that most commonly inflate metrology risk.

A proper calibration chain should look like:

- SI definition (BIPM)

- Primary (national) standards maintained by national metrology institutes (NIST, PTB, NPL)

- Reference standards (accredited calibration labs)

- Working standards (your lab's master gages)

- Field instruments (shop-floor calipers, micrometers)
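An "unbroken chain" is something you can check mechanically. The sketch below assumes a hypothetical record format. In it, every instrument names the standard it was calibrated against, and it either documents its uncertainty or leaves that field as `None`. The function walks from a shop instrument up to the SI definition and reports every break it finds.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Link:
    calibrated_against: Optional[str]  # None only for the SI definition itself
    uncertainty_um: Optional[float]    # None means no documented uncertainty


def check_chain(links: dict[str, Link], start: str) -> list[str]:
    """Walk from `start` toward "SI" and return a list of problems found."""
    problems = []
    seen = set()
    current = start
    while True:
        if current in seen:
            problems.append(f"cycle detected at {current}")
            break
        seen.add(current)
        link = links.get(current)
        if link is None:
            problems.append(f"no record for {current}")
            break
        if link.calibrated_against is None:
            if current != "SI":
                problems.append(f"{current} has no parent but is not the SI definition")
            break
        if link.uncertainty_um is None:
            problems.append(f"{current}: no documented uncertainty")
        current = link.calibrated_against
    return problems


# Hypothetical records mirroring the scenario above: the caliper claims
# direct NIST traceability but has no documented uncertainty.
LINKS = {
    "SI": Link(None, None),
    "NIST": Link("SI", 0.01),
    "Lab gage blocks": Link("NIST", 0.05),
    "Shop caliper": Link("NIST", None),
}

print(check_chain(LINKS, "Shop caliper"))
# ['Shop caliper: no documented uncertainty']
```

This only checks two things: that the chain reaches SI, and that every link carries a documented uncertainty. A real audit would also look at dates, certificate numbers, and the conditions of each transfer.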

During a recent supplier audit, I found a shop using digital calipers with undocumented calibration intervals. Their "traceable" claim crumbled when I asked for the uncertainty budgets linking their gage blocks to their production measurements. Remember: traceability without documented uncertainty propagation isn't traceability. It's guesswork with paperwork.


How Do National Standards Actually Impact My Daily Measurements?

National metrology institutes (NIST, NPL, PTB) maintain the physical realizations of SI units, but their direct impact on your shop is often misunderstood. National standards matter because they anchor the uncertainty pyramid (your measurements inherit stability from this foundation). However, the practical impact comes through accredited labs that translate these standards to industry-relevant artifacts.

If you're unsure whether your data are precise, accurate, or both, start with our accuracy vs precision primer. When validating a new CMM, I always review the full chain on the calibration certificate, not just the final number. A certificate showing "uncertainty: 0.0001" with no units, coverage factor, or conditions is useless. I need to see:

- Reference standards used

- Environmental conditions during calibration

- Mathematical model of uncertainty propagation

- Dates of each calibration transfer

Without these, even a highly rated digital caliper's readings might not be traceable to a standard under your actual shop conditions. One aerospace supplier learned this the hard way. Their "traceable" bore gages failed GR&R because their uncertainty budget ignored thermal expansion coefficients.
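The certificate review above can be scripted as a first-pass screen. The field names below (`reference_standards`, `environment`, `temp_range_c`, and so on) are hypothetical, so map them to however your certificates are actually captured. The function flags three things: missing items from the list, an uncertainty stated without units or a coverage factor, and a shop temperature outside the calibrated range.

```python
REQUIRED_FIELDS = (
    "reference_standards",
    "environment",
    "uncertainty_model",
    "transfer_dates",
)


def review_certificate(cert: dict, shop_temp_c: float) -> list[str]:
    """Return findings for a calibration certificate captured as a dict."""
    findings = [f"missing: {field}" for field in REQUIRED_FIELDS if not cert.get(field)]

    # An uncertainty without units and a coverage factor has no usable meaning
    stated_u = cert.get("uncertainty") or {}
    for key in ("value", "unit", "k"):
        if key not in stated_u:
            findings.append(f"uncertainty lacks {key}")

    # Does the calibration environment cover the conditions where the tool is used?
    env = cert.get("environment") or {}
    temp_range = env.get("temp_range_c")
    if temp_range is not None:
        lo, hi = temp_range
        if not lo <= shop_temp_c <= hi:
            findings.append(
                f"shop at {shop_temp_c} °C is outside calibrated range {lo}-{hi} °C"
            )
    return findings


# Hypothetical certificate with the bare "uncertainty: 0.0001" problem
cert = {
    "reference_standards": ["gage block set (placeholder ID)"],
    "environment": {"temp_range_c": (19.5, 20.5)},
    "uncertainty_model": "GUM, RSS of listed contributors",
    "transfer_dates": ["<date of each transfer>"],
    "uncertainty": {"value": 0.0001},
}
for finding in review_certificate(cert, shop_temp_c=28.0):
    print(finding)
```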

What's the Relationship Between ISO 17025 Compliance and Real Traceability?

ISO/IEC 17025 compliance provides the framework, but true traceability requires engineering judgment. The standard mandates documented calibration chains and uncertainty evaluations. It doesn't tell you how to apply them to your unique tolerance stack. I've seen shops pass audits yet still produce bad parts because they followed the ISO/IEC 17025 paperwork without understanding their measurement risk. For a small-lab roadmap, see our ISO/IEC 17025 accreditation guide.

Key gaps I routinely encounter in ISO/IEC 17025-compliant calibration programs:

- Certificates showing "as found" data but missing uncertainty contributions from environmental factors

- Calibration intervals based on calendar time rather than usage conditions

- No correlation between lab measurements and shop-floor reality

- Uncertainty budgets that ignore operator influence

After our heat-wave incident, in which unchecked thermal drift scrapped a batch, I implemented a simple rule: every measurement must be reported with its assumptions stated. If your calibration lab doesn't specify the temperature stability during measurement, its uncertainty claim doesn't apply to your shop.
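One way to enforce that rule in software is to make the assumptions mandatory parts of the measurement record. A result then can't be stored or printed without them. This is a hypothetical Python dataclass, not a standard format, and the field names are my own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Measurement:
    value_mm: float
    expanded_u_mm: float  # expanded uncertainty U
    k: float              # coverage factor used to obtain U
    temp_c: float         # temperature at the time of measurement
    cte_per_c: float      # assumed coefficient of thermal expansion of the part

    def __post_init__(self):
        # Refuse to create a "bare" number with no stated uncertainty
        if self.expanded_u_mm <= 0 or self.k <= 0:
            raise ValueError("state a positive uncertainty and coverage factor")

    def report(self) -> str:
        """Format the result together with the assumptions it depends on."""
        return (
            f"{self.value_mm:.4f} mm ± {self.expanded_u_mm:.4f} mm (k={self.k:g}), "
            f"measured at {self.temp_c:.1f} °C, assumed CTE {self.cte_per_c * 1e6:.1f} ppm/°C"
        )


m = Measurement(value_mm=25.0012, expanded_u_mm=0.0020, k=2, temp_c=21.3, cte_per_c=11.5e-6)
print(m.report())
```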

How Should I Design a Traceability System for My Specific Tolerance Stack?

Start with your tightest tolerance. If it's ±0.025 mm and you aim for a test uncertainty ratio (TUR) of at least 4:1, the expanded uncertainty of your whole measurement process must be no more than ±0.00625 mm (0.025 / 4). That process uncertainty combines the calibration chain, environment, and operator, rather than treating each one separately. To translate tolerances into inspectable features, use our GD&T measurement guide.
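The arithmetic is easy to automate. This sketch uses one common convention, the one I understand ANSI/NCSL Z540.3 to use. Under it, TUR is the tolerance span divided by twice the expanded uncertainty at about 95 % coverage. For a symmetric tolerance, that reduces to the half-tolerance divided by U. Check which definition your own quality system actually uses.

```python
def tur(half_tolerance: float, expanded_u: float) -> float:
    """Test uncertainty ratio for a symmetric tolerance: (2 * T) / (2 * U) = T / U."""
    return half_tolerance / expanded_u


def max_expanded_u(half_tolerance: float, required_tur: float = 4.0) -> float:
    """Largest expanded uncertainty U (about 95 %, k=2) that still meets the required TUR."""
    return half_tolerance / required_tur


print(max_expanded_u(0.025))     # 0.00625  (mm, for a ±0.025 mm tolerance)
print(tur(0.025, 0.008) >= 4.0)  # False: a U of 0.008 mm gives only about 3.1:1
```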

My proven approach:

- Map your tolerance stack to required measurement uncertainty

- Calculate environmental error bars from thermal expansion coefficients (e.g., about 11.5 ppm/°C for steel)

- Document all calibration chain links with uncertainty contributions

- Validate with correlation studies between lab and shop instruments
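Here's a minimal sketch of the first two steps together. It estimates the length change of an uncompensated steel feature away from the 20°C reference and folds that into an RSS budget. All of the assumptions are mine:

- the 11.5 ppm/°C steel figure above
- a 100 mm feature measured at 28°C
- placeholder standard uncertainties for the other contributors
- the uncorrected thermal error treated as a rectangular bound (divided by √3)

Correcting for a known expansion is usually better than budgeting it as uncertainty.

```python
import math

ALPHA_STEEL = 11.5e-6  # per °C, the steel figure quoted above


def thermal_error_mm(length_mm, temp_c, alpha=ALPHA_STEEL, ref_temp_c=20.0):
    """Length change of an uncompensated part relative to the 20 °C reference."""
    return alpha * length_mm * (temp_c - ref_temp_c)


def expanded_uncertainty_mm(std_uncertainties_mm, k=2.0):
    """Combine uncorrelated standard uncertainties by root-sum-square, then expand by k."""
    return k * math.sqrt(sum(u * u for u in std_uncertainties_mm))


# Hypothetical 100 mm steel feature measured at 28 °C without correction
dl = thermal_error_mm(100.0, 28.0)  # about 0.0092 mm
budget = {
    "calibration chain": 0.0010,        # placeholder standard uncertainties, mm
    "operator / repeatability": 0.0015,
    "thermal (rectangular)": abs(dl) / math.sqrt(3),
}
U = expanded_uncertainty_mm(budget.values())
print(f"thermal error {dl:.4f} mm, U(k=2) = {U:.4f} mm")
```

With these placeholder numbers, U comes out a little over 0.011 mm. That is well past the 0.00625 mm limit from the ±0.025 mm, 4:1 example. The thermal term is the reason: without it, U would be about 0.0036 mm.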

Case study: A medical device manufacturer reduced scrap by 37% after implementing this for their catheter tubing measurements. They stopped focusing on "NIST traceable" labels and engineered a system where every measurement included error bars reflecting actual shop conditions. Their quality manager told me: "We finally trust our first-pass yield numbers."

What Practical Steps Ensure True Measurement Traceability?

- Demand full uncertainty budgets (not just "compliant" statements)

- Validate calibration intervals based on usage (not just annual cycles)

- Correlate lab-to-shop measurements under representative conditions

- Document environmental controls with hourly logs during critical runs

- Train technicians on uncertainty contributors (not just how to read displays)
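For the lab-to-shop correlation step, one simple check is to measure the same parts in both places and compute two statistics. The first is the mean bias. The second is the normalized error used in interlaboratory comparisons, En = (x_shop - x_lab) / sqrt(U_shop² + U_lab²). An |En| ≤ 1 means the two results agree within their stated expanded uncertainties. The readings and uncertainties below are invented for illustration, and En assumes the two measurements are independent.

```python
import math
from statistics import mean, stdev


def en_number(x_shop, u_shop, x_lab, u_lab):
    """Normalized error between two results with expanded uncertainties u_shop and u_lab."""
    return (x_shop - x_lab) / math.sqrt(u_shop ** 2 + u_lab ** 2)


def correlate(shop, lab, u_shop, u_lab):
    """Summarize paired lab vs. shop readings of the same parts."""
    diffs = [s - l for s, l in zip(shop, lab)]
    ens = [en_number(s, u_shop, l, u_lab) for s, l in zip(shop, lab)]
    return {
        "mean_bias": mean(diffs),     # systematic offset, shop minus lab
        "sd_of_diffs": stdev(diffs),  # scatter of the differences (needs >= 2 parts)
        "worst_en": max(ens, key=abs),
        "all_agree": all(abs(e) <= 1.0 for e in ens),
    }


# Hypothetical paired readings (mm) on four parts
shop = [10.004, 10.012, 9.998, 10.007]
lab = [10.001, 10.009, 9.996, 10.002]
summary = correlate(shop, lab, u_shop=0.004, u_lab=0.001)
print(summary)
```

In this made-up data the fourth part fails, with |En| of about 1.2. That is the kind of disagreement worth chasing before you trust shop readings.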

Remember that heat-wave story? Our surface plate drifted 0.05 mm at 28°C. The fix wasn't just better AC. It was thermal monitoring that feeds directly into our uncertainty calculations. Now, when temperatures rise, our operators adjust acceptance limits based on documented thermal coefficients, not guesswork.
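Adjusting acceptance limits this way is usually called guard banding. The sketch below assumes a simple decision rule in the spirit of ISO 14253-1: pull each tolerance limit in by the expanded uncertainty at the logged temperature. The CTE, part length, tolerance, and the 0.0018 mm "everything else" standard uncertainty are all hypothetical. The uncorrected thermal expansion is treated as a rectangular contribution.

```python
import math

ALPHA = 11.5e-6  # per °C, assumed steel part


def acceptance_limits(nominal_mm, half_tol_mm, length_mm, temp_c, u_other_mm, k=2.0):
    """Return (lower, upper) acceptance limits guard-banded by U at temp_c."""
    # Uncorrected expansion vs. 20 °C, treated as a rectangular bound
    u_thermal = abs(ALPHA * length_mm * (temp_c - 20.0)) / math.sqrt(3)
    U = k * math.sqrt(u_other_mm ** 2 + u_thermal ** 2)
    if U >= half_tol_mm:
        raise ValueError("uncertainty consumes the whole tolerance; measure under control")
    return nominal_mm - half_tol_mm + U, nominal_mm + half_tol_mm - U


# Hypothetical hourly temperature log for a 100 mm ±0.025 mm feature
for temp in (20.5, 24.0, 28.0):
    lo, hi = acceptance_limits(100.0, 0.025, 100.0, temp, u_other_mm=0.0018)
    print(f"{temp:4.1f} °C: accept {lo:.4f} to {hi:.4f} mm")
```

As the shop warms, the accept zone narrows. Past some temperature it vanishes entirely, and the sensible response is to stop measuring, not to widen the limits.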

Measurement traceability isn't about certificates; it's about engineered confidence. When your calibration chain respects explicit tolerances and environmental reality, your data becomes trustworthy enough to bet production on. That's when you'll see the real ROI: fewer holds, faster audits, and parts that actually fit.

For deeper implementation guidance, I recommend the NIST Technical Note 1297 on uncertainty evaluation (it's the most practical framework I've used for building defensible measurement systems). Next time you're selecting equipment, ask how it supports your uncertainty budget, not just what resolution it boasts.