Accuracy vs Precision: Stop Measurement Mistakes

Ever sent a good part to scrap because your team couldn't tell accuracy from precision? That's not a tool failure; it's a workflow failure. When operators conflate the two terms, the expensive measurement errors that follow come from human factors, not instrument specs. Let's cut the confusion with shop-floor clarity.

I've seen too many shops pour money into high-resolution tools while missing basic repeatability, like the team that trusted "precise" calipers until we discovered inconsistent thumb pressure skewed readings. Their parts weren't drifting; the measurement method was. That's why I design metrology into workflows, not as an afterthought. If operators can't repeat it, it doesn't measure.

Why Accuracy vs Precision Confusion Costs You Money

The Real-World Consequence of Mislabeling

Accuracy means hitting the true value (like a dart near the bullseye). Precision means consistency (darts clustered together, even if off-target). But on the shop floor, we see:

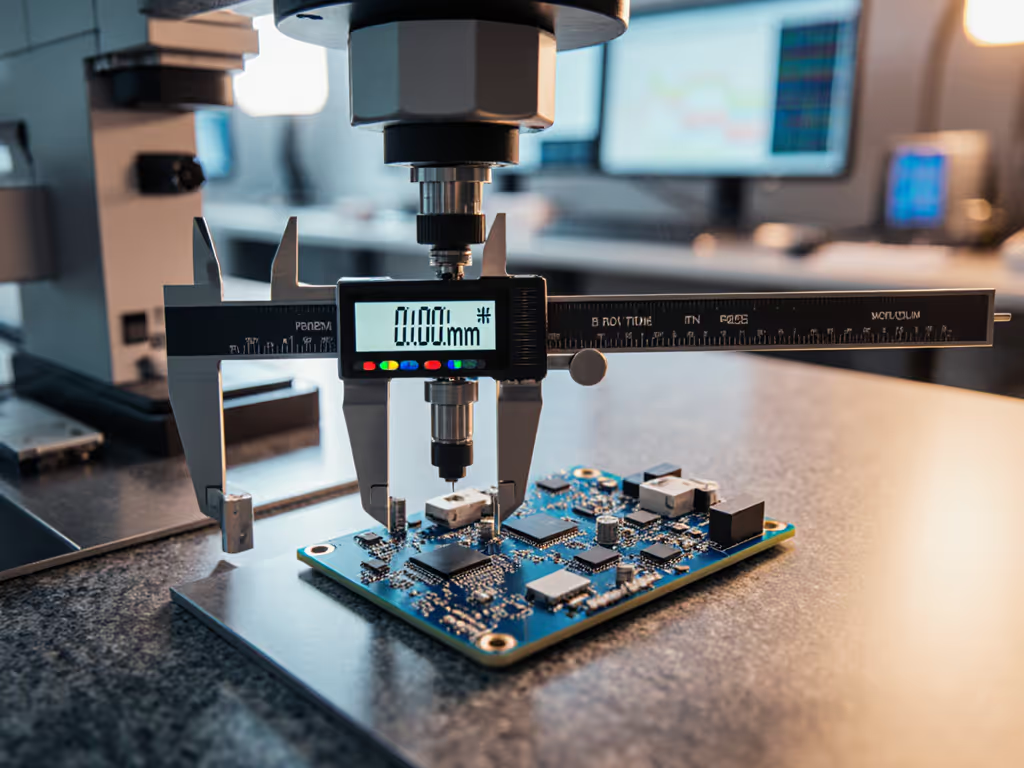

- "Precise but inaccurate" tools causing false scrap. Example: A digital caliper whose readings repeat within 0.0005" but all sit 0.002" high due to calibration drift. Operators assume consistency = correctness.

- "Accurate but imprecise" methods wasting time. Example: A well-calibrated micrometer giving inconsistent results because operators use varying spindle force.

This isn't academic. Last quarter, a medical device supplier I advised scrapped 37 valve housings because their GR&R study relied on "precision" tools without checking repeatability under actual conditions. The calipers met spec on the bench, but not with oily gloves and 24/7 shifts. Tolerance levels weren't the issue; human-tool interaction was.

Remember: A tool can be precise without being accurate, but without accuracy, precision is just expensive noise.
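To make the split concrete, here is a minimal Python sketch that scores a set of repeat readings on both axes: bias (how far the average sits from the reference, i.e. accuracy) and spread (sample standard deviation, i.e. precision). The function name `summarize_readings` and both data sets are made up for illustration; they loosely mirror the drifted caliper and the varying-force micrometer described above.

```python
from statistics import mean, stdev


def summarize_readings(readings, reference):
    """Return (bias, spread) for repeat readings of one reference standard."""
    bias = mean(readings) - reference  # accuracy: offset from the true value
    spread = stdev(readings)           # precision: sample standard deviation
    return bias, spread


# Hypothetical readings (inches) of a 0.5000" reference standard.
drifted_caliper = [0.5020, 0.5021, 0.5019, 0.5020, 0.5020]    # tight but high
varying_force_mic = [0.4994, 0.5007, 0.4998, 0.5004, 0.4997]  # centered but loose

for name, data in [("drifted caliper", drifted_caliper),
                   ("varying-force micrometer", varying_force_mic)]:
    bias, spread = summarize_readings(data, 0.5000)
    print(f"{name}: bias {bias:+.4f} in, spread {spread:.4f} in")
```

The caliper shows a large bias with almost no spread; the micrometer shows almost no bias with a large spread. Each fails in a different way, and each needs a different fix.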

The Hidden Cost of Uncertainty

When measurement uncertainty isn't mapped to your tolerance stack, you pay three ways:

- False acceptance: Parts that pass measurement but fail function (e.g., a reading at 98% of the tolerance band when measurement uncertainty is 5% of that band, so the true size may already be out of tolerance).

- False rejection: Good parts sent to rework/scrap (costing $200–$500 per hour in aerospace).

- Audit risk: ISO 9001/AS9100 findings for uncontrolled calibration (e.g., tools recalibrated only when broken).

Worse? Many teams chase resolution (e.g., 0.0001" displays) while ignoring tool repeatability. A $500 digital micrometer with poor ergonomics may underperform a $150 analog version operated correctly. That's why I insist on repeatability over theatrics.
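One way to see where false acceptance and false rejection come from is to treat every reading as a range (reading ± uncertainty) rather than a point. The sketch below is an illustration under assumptions, not a standard's decision rule: `classify`, the limits, and the ±0.0005" uncertainty (5% of a 0.010" band) are all hypothetical.

```python
def classify(reading, lower, upper, uncertainty):
    """Classify a reading whose true value lies within reading +/- uncertainty."""
    low, high = reading - uncertainty, reading + uncertainty
    if low >= lower and high <= upper:
        return "accept"      # whole range sits inside the limits
    if high < lower or low > upper:
        return "reject"      # whole range sits outside the limits
    return "uncertain"       # range straddles a limit: the reading can't decide


# Hypothetical part: 1.000" +/- 0.005", measured with +/- 0.0005" uncertainty.
LOWER, UPPER, U = 0.995, 1.005, 0.0005

for reading in (1.0020, 1.0049, 1.0060):
    print(f"{reading:.4f} in -> {classify(reading, LOWER, UPPER, U)}")
```

Readings that land in the "uncertain" zone are where the money goes: call them good and you risk false acceptance, call them bad and you risk false rejection.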

The Operator-First Fix: Stop Guessing, Start Doing

Step 1: Separate the Terms in Daily Language

Ditch the dartboard analogy. Use operator checklists with visual anchors:

| Term | Shop-Floor Meaning | Teach-Back Cue |
|---|---|---|
| Accuracy | "Hitting the true value" | "Is it right?" (e.g., calibrated against gage blocks) |
| Precision | "Hitting the same spot" | "Is it repeatable?" (e.g., same operator, same part, 10x) |

Pro Tip: Run a 2-minute "true value" test tomorrow. Have operators measure a certified gage pin 5x without resetting zero. If the readings vary by more than 10% of your tolerance, the measurement method is failing on repeatability, whatever the tool's spec sheet says.
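If you want to score the gage-pin test the same way every time, a few lines of Python will do it. This sketch assumes "10% of your tolerance" means 10% of the total tolerance band; the function name `repeat_check` and the sample readings are hypothetical.

```python
def repeat_check(readings, tolerance_band, limit_fraction=0.10):
    """Return (spread, failed) for repeat readings of one gage pin.

    tolerance_band is the total band (e.g. 0.004 for +/- 0.002).
    failed is True when the spread exceeds limit_fraction of that band.
    """
    spread = max(readings) - min(readings)
    return spread, spread > limit_fraction * tolerance_band


# Hypothetical: five readings (inches) of a 0.2500" pin, part tolerance +/- 0.002".
readings = [0.2500, 0.2503, 0.2498, 0.2501, 0.2507]
spread, failed = repeat_check(readings, tolerance_band=0.004)
print(f"spread {spread:.4f} in, {'FAIL' if failed else 'pass'}")
```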

Step 2: Match Tools to Tolerance Levels (Not Brochure Claims)

Stop overbuying. Use this rule:

Required tool capability = Part tolerance ÷ 4

Example: For a ±0.005" tolerance, your tool must be good to ±0.00125" or better (0.0025" total error), one quarter of the 0.010" total band.

| Part Tolerance | Minimum Tool Capability | Common Pitfall |
|---|---|---|
| ±0.010" | 0.0025" resolution | Using rulers for tight fits |
| ±0.005" | 0.00125" resolution | Assuming digital = better |
| ±0.001" | 0.00025" resolution | Ignoring thermal effects |
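The table values come straight from the divide-by-four rule, so they are easy to regenerate for any tolerance on your prints. A minimal sketch, with `required_capability` as a made-up helper name and the 4:1 ratio taken from the rule above:

```python
def required_capability(plus_minus_tolerance, ratio=4):
    """Tool capability needed for a +/- tolerance under the divide-by-ratio rule."""
    return plus_minus_tolerance / ratio


for tol in (0.010, 0.005, 0.001):
    print(f"+/-{tol:.3f} in tolerance -> {required_capability(tol):.5f} in capability")
```

Swap in your own tolerances, then compare the result against what the tool actually delivers in your environment, not the brochure figure.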

Critical: Test tools in your environment. I once saw a shop replace $2k optical comparators because coolant mist fogged lenses (solved with a $40 Starrett Steel Rule for rough checks).


Step 3: Design Measurement Into Workflow - Not After

Repeatability lives where humans touch tools. Build these habits:

- Force control: Use thumb wheels or preset stops (e.g., on Starrett calipers) to eliminate finger pressure variables. One auto shop cut GR&R by 22% just adding a $2 friction sleeve.

- Calibration rhythm: Tie recalibration to usage cycles, not calendar dates. Example: "Recalibrate micrometers every 500 parts, not annually."

- Ergo-checks: Before shift start, operators test tools with gloves on. If they can't zero a caliper comfortably, the tool fails.
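Usage-based calibration only works if someone is counting. As a rough sketch, assuming you can export a parts-since-calibration count per tool, the check is a one-line filter. The `Tool` record, the tool IDs, and the counts below are hypothetical; the 500-part limit is the example figure from the list above.

```python
from dataclasses import dataclass


@dataclass
class Tool:
    tool_id: str
    parts_since_calibration: int


def due_for_calibration(tools, limit=500):
    """Return IDs of tools that have reached the usage limit since calibration."""
    return [t.tool_id for t in tools if t.parts_since_calibration >= limit]


# Hypothetical tool crib snapshot.
crib = [
    Tool("MIC-014", 512),
    Tool("MIC-022", 140),
    Tool("CAL-007", 500),
]
print(due_for_calibration(crib))
```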

Your Action Plan: Stop Measurement Errors Tomorrow

- Run the 5-Minute GR&R Drill: Pick one critical dimension. Have 3 operators measure the same part 5x each. Calculate:

(Max Reading - Min Reading) ÷ Tolerance Band × 100 = GR&R %

Treat this as a quick range screen rather than a formal GR&R study. If the result is over 15%, repeatability is broken. Fix the workflow before buying new tools.

- Post This Visual Anchor: Put a laminated card at every station:

"Accuracy: Is it RIGHT? (Check against gage blocks) Precision: Is it REPEATABLE? (Same operator, same part, 5x)"

- Audit Calibration Logs Weekly: Flag tools used >500 cycles since last calibration. Recalibrate before they drift.
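The drill's arithmetic can be sketched in a few lines of Python. This implements only the range-over-tolerance ratio from the drill, not a formal GR&R analysis, and it assumes "Tolerance Band" means the total band. The per-operator ranges are an addition of mine to show who is driving the spread; the function names and readings are hypothetical.

```python
def drill_percent(readings_by_operator, tolerance_band):
    """(max reading - min reading) / tolerance band * 100, across all operators."""
    all_readings = [r for rs in readings_by_operator.values() for r in rs]
    return (max(all_readings) - min(all_readings)) / tolerance_band * 100


def operator_ranges(readings_by_operator):
    """Range of each operator's own readings, to spot a handling problem."""
    return {op: max(rs) - min(rs) for op, rs in readings_by_operator.items()}


# Hypothetical drill: 3 operators x 5 readings (inches), part tolerance +/- 0.005".
drill = {
    "A": [0.7502, 0.7503, 0.7501, 0.7502, 0.7503],
    "B": [0.7505, 0.7506, 0.7504, 0.7506, 0.7505],
    "C": [0.7498, 0.7512, 0.7501, 0.7509, 0.7495],
}

pct = drill_percent(drill, tolerance_band=0.010)
print(f"drill result: {pct:.1f}% ({'FAIL' if pct > 15 else 'pass'})")
for op, rng in operator_ranges(drill).items():
    print(f"operator {op}: range {rng:.4f} in")
```

In this made-up data, operators A and B repeat tightly while operator C does not, which points at handling on one station rather than at the tool.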

The explanation for most measurement error? It's not the tool's fault; it's the system. Last week, a job shop slashed scrap by 31% by having operators teach-back the force needed on calipers. The part didn't change; the handling did. That's repeatability over theatrics.

Your move: Walk the floor tomorrow. Find one station where "precision" tools are failing accuracy. Implement the 5-minute drill. I'll wager you find human touch, not specs, is the fix. Because if operators can't repeat it, it doesn't measure.