AI System Metrology: Quantify Performance Uncertainty

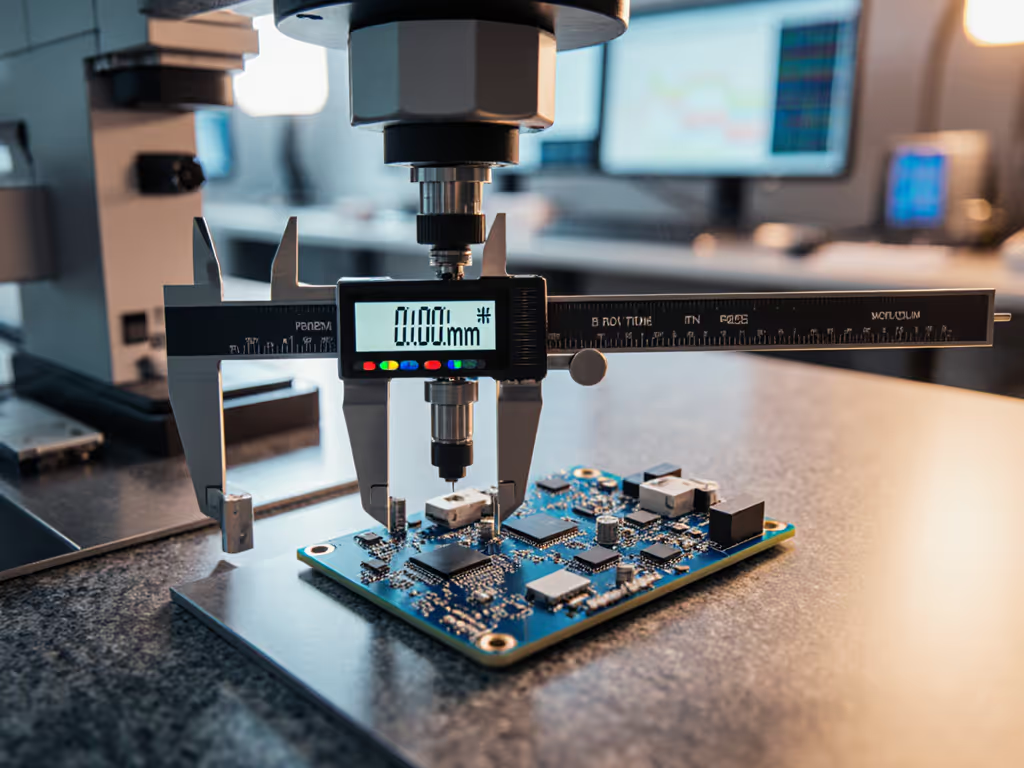

When your CNC machine vision system flags a turbine blade as out-of-tolerance, can you prove it wasn't the AI making the error? That's where AI system metrology becomes your audit lifeline. As manufacturers integrate more AI into inspection and process control, the same fundamental metrology principles that govern your micrometers and CMMs now apply to algorithms. Measurement for artificial intelligence isn't theoretical; it is the difference between a clean audit finding and a costly production stoppage when your model's uncertainty exceeds part tolerances. I've seen audits stall because teams treated AI like software rather than a calibrated measurement instrument. Trace it, budget it, then trust it under audit. For fundamentals, see our primer on measurement traceability.

This is especially critical as you deploy AI in precision manufacturing where sub-millimeter decisions determine part acceptability. Let's address your most pressing questions about building metrology-grade AI systems.

What does "AI system metrology" actually mean for my shop floor?

Think of your AI model as another piece of calibrated equipment, like the laser tracker you validate before critical alignments. AI system metrology applies the same traceability chains and uncertainty budgets we use for physical instruments to algorithmic decision-makers. When your vision system measures a medical implant's surface finish, that output has:

- A measurement uncertainty (how much it might deviate from truth)

- Environmental dependencies (lighting, temperature effects on camera sensors)

- Calibration requirements (regular validation against physical standards)

Just as you'd document your caliper's traceability to NMI standards, your AI system needs documented traceability to reference datasets with known uncertainty. Apply metrology rigor to datasets with our guide to AI training data validation. That time an auditor asked for the thermometer calibration behind our CMM room logs? Same principle applies to the datasets training your model, and without that chain, your AI's "measurements" carry no metrological validity.

How do I quantify performance uncertainty for an AI-based inspection system?

Start by building an uncertainty budget like you would for any measurement system, but include AI-specific contributors:

- Data uncertainty: Variability in training/validation data (e.g., ±0.05mm in reference measurements)

- Algorithmic uncertainty: Model variance across different inputs (Monte Carlo simulations help here)

- Environmental uncertainty: Temperature/humidity effects on both hardware and model performance

- Operational uncertainty: How operator actions (e.g., part placement) affect results

Your total uncertainty statement should look familiar: "0.05mm ± 0.012mm (k=2) at 20°C ±2°C, based on ISO 14253-1 with additional AI uncertainty contributors per NIST IR 8402."
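To make the budget concrete, here is a minimal sketch of how the four contributors could be combined. It assumes the contributors are uncorrelated, each has a sensitivity coefficient of 1, and each is already expressed as a standard uncertainty in millimeters, so a simple root-sum-of-squares applies. The numbers and the names `budget_mm`, `combined_standard_uncertainty`, and `expanded_uncertainty` are illustrative placeholders, not values from a real system.

```python
import math


def combined_standard_uncertainty(contributors):
    """Root-sum-of-squares of standard uncertainties.

    Assumes uncorrelated contributors with sensitivity coefficients of 1.
    """
    return math.sqrt(sum(u ** 2 for u in contributors.values()))


def expanded_uncertainty(contributors, k=2.0):
    """Expanded uncertainty U = k * u_c for coverage factor k."""
    return k * combined_standard_uncertainty(contributors)


# Hypothetical standard uncertainties in mm, one per contributor type.
budget_mm = {
    "data": 0.004,           # reference measurements behind the dataset
    "algorithmic": 0.003,    # model variance across inputs
    "environmental": 0.003,  # temperature/humidity effects
    "operational": 0.002,    # part placement, operator handling
}

u_c = combined_standard_uncertainty(budget_mm)
U = expanded_uncertainty(budget_mm, k=2.0)
print(f"u_c = {u_c:.4f} mm, U (k=2) = {U:.4f} mm")
```

If your contributors are correlated (for example, temperature affecting both the camera and the fixture), a plain root-sum-of-squares will misstate the result and you would need covariance terms in the budget.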

Remember: Uncertainty bites at edges when production runs push tolerance limits. Your AI model might perform well at nominal conditions but fail catastrophically when coolant mist affects camera input.

What frameworks exist for measuring bias in AI systems used for quality control?

Bias measurement frameworks are your antidote to "black box" anxiety. Implement these three audit-ready practices:

- Stratified error analysis: Break down model errors by part geometry, material batch, or operator shift to identify systematic biases

- Counterfactual testing: "What if" scenarios where you deliberately introduce controlled deviations to verify expected model responses

- Adversarial validation: Use techniques from cybersecurity to test model robustness against edge cases (e.g., unusual lighting conditions)
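As a rough illustration of stratified error analysis, the sketch below groups signed errors (AI output minus reference value) by a stratum such as surface finish and reports the mean per group. The record layout, the field names `ai_mm`, `ref_mm`, and `finish`, and the sample values are all assumptions made for the example.

```python
from collections import defaultdict
from statistics import mean


def stratified_bias(records, key):
    """Mean signed error (AI minus reference) for each value of `key`."""
    groups = defaultdict(list)
    for record in records:
        groups[record[key]].append(record["ai_mm"] - record["ref_mm"])
    return {stratum: mean(errors) for stratum, errors in groups.items()}


# Hypothetical inspection records against a 10.000 mm reference artifact.
records = [
    {"finish": "matte", "ai_mm": 10.002, "ref_mm": 10.000},
    {"finish": "matte", "ai_mm": 9.998, "ref_mm": 10.000},
    {"finish": "polished", "ai_mm": 10.012, "ref_mm": 10.000},
    {"finish": "polished", "ai_mm": 10.008, "ref_mm": 10.000},
]

bias = stratified_bias(records, "finish")
for finish, value in bias.items():
    print(f"{finish}: mean signed error {value:+.3f} mm")
```

An overall average would hide the pattern here: the matte errors cancel out, while the polished parts show a consistent positive offset. That per-stratum offset is the systematic bias you want documented.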

For medical device inspection, this means documenting how your model performs across different implant surface finishes, not just average accuracy. I recently helped a client document bias against highly polished surfaces; that transparency saved them during an FDA audit when the model initially missed micro-scratches on reflective surfaces.

How can I detect model drift without disrupting production?

Model drift detection requires the same vigilance as monitoring gauge repeatability. For a broader view of how algorithms and measurement systems work together on the shop floor, read AI in metrology. Set up these quiet guardians:

- Statistical process control for AI: Track model confidence scores and prediction distributions using Shewhart control charts

- Shadow mode validation: Run the new model in parallel with current systems without affecting production decisions

- Input domain monitoring: Flag when real-world data falls outside training distribution (e.g., new material batches)
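The control-chart idea can be sketched as a Shewhart individuals chart on a daily summary of model confidence. This is one possible setup, assuming you log a single mean confidence score per day and estimate sigma from the average moving range (divided by the standard constant 1.128 for ranges of two points). The baseline and new scores are made-up numbers.

```python
from statistics import mean


def individuals_chart_limits(baseline):
    """Return (LCL, center line, UCL) for a Shewhart individuals chart.

    Sigma is estimated as the mean moving range divided by d2 = 1.128.
    """
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    sigma = mean(moving_ranges) / 1.128
    center = mean(baseline)
    return center - 3 * sigma, center, center + 3 * sigma


def out_of_control(values, lcl, ucl):
    """Indices of points that fall outside the control limits."""
    return [i for i, v in enumerate(values) if v < lcl or v > ucl]


# Hypothetical daily mean confidence scores from a stable baseline period.
baseline = [0.94, 0.95, 0.93, 0.96, 0.94, 0.95, 0.94, 0.93, 0.95, 0.94]
lcl, center, ucl = individuals_chart_limits(baseline)

# Hypothetical scores from the following days of production.
new_scores = [0.95, 0.93, 0.88, 0.94]
flagged = out_of_control(new_scores, lcl, ucl)
print(f"LCL={lcl:.3f} CL={center:.3f} UCL={ucl:.3f} flagged={flagged}")
```

A point outside the limits is a prompt to investigate, not proof of drift. Confidence scores can shift because of a new material batch or a lighting change, which is why this check pairs well with input domain monitoring.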

One aerospace supplier I worked with implemented a monthly "model gage R&R": comparing AI outputs against master artifacts across their tolerance band. When they saw a 15% increase in variation at the upper end of the tolerance band, they caught drift before it caused scrap. This isn't just smart engineering; it is what auditors look for when reviewing your AI management system.

What validation metrics actually matter for reliability under audit?

Forget "overall accuracy." For reliability validation metrics, focus on what affects your production outcomes:

| Metric | Manufacturing Relevance | Audit Documentation |
|---|---|---|
| Precision at tolerance limit | Predicts false accepts/rejects at critical dimensions | Required under ISO/IEC 17025 for decision rules |
| Recall for critical defects | Ensures safety-critical flaws aren't missed | Mandatory for AS9100 aerospace compliance |
| Model stability index | Tracks gradual performance degradation | Evidence of proactive monitoring for ISO 9001 |
| Environmental sensitivity | Documents operational boundaries | Critical for IATF 16949 process control |

Auditors don't care about your training F1 score. They'll ask how your system performs at the edge of your tolerance specification under actual shop conditions. Document your uncertainty budget at those critical points, with evidence of regular validation against physical standards. To align decision rules and documentation with audit expectations, follow our ISO/IEC 17025 accreditation guide.
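One way to compute precision and recall at the tolerance limit is to restrict the evaluation to parts whose reference measurement falls within a narrow band around that limit. The sketch below does this for an upper limit only. The band width, the limit, the `(reference_mm, ai_rejected)` sample layout, and the data are all hypothetical choices for illustration.

```python
def precision_recall_near_limit(samples, upper_limit_mm, band_mm):
    """Precision and recall of the AI 'reject' decision near the upper limit.

    samples: iterable of (reference_mm, ai_rejected) pairs, where
    reference_mm comes from a trusted reference measurement.
    Returns (precision, recall, number of parts inside the band).
    """
    near = [(ref, rej) for ref, rej in samples
            if abs(ref - upper_limit_mm) <= band_mm]
    tp = sum(1 for ref, rej in near if rej and ref > upper_limit_mm)
    fp = sum(1 for ref, rej in near if rej and ref <= upper_limit_mm)
    fn = sum(1 for ref, rej in near if not rej and ref > upper_limit_mm)
    precision = tp / (tp + fp) if tp + fp else None
    recall = tp / (tp + fn) if tp + fn else None
    return precision, recall, len(near)


# Hypothetical parts: (reference measurement in mm, did the AI reject it?)
samples = [
    (10.000, False),  # nominal part, outside the band, ignored
    (10.045, False),  # in tolerance, correctly accepted
    (10.048, True),   # in tolerance, falsely rejected
    (10.052, True),   # out of tolerance, correctly rejected
    (10.055, True),   # out of tolerance, correctly rejected
    (10.058, False),  # out of tolerance, falsely accepted
    (10.080, True),   # gross defect, outside the band, ignored
]

precision, recall, n = precision_recall_near_limit(
    samples, upper_limit_mm=10.050, band_mm=0.010
)
print(f"n={n} precision={precision:.2f} recall={recall:.2f}")
```

Easy cases like the nominal part and the gross defect are excluded on purpose. They inflate overall accuracy without telling you anything about behavior where false accepts and false rejects actually happen.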

Why this matters for your next audit

Your AI system isn't "software" to your auditor. It is a measurement instrument. When you treat it as such, with documented traceability chains and uncertainty budgets, you transform anxiety into confidence. That moment when I produced the thermometer calibration chain for our CMM environment? The same principle applies to your AI: show the complete measurement infrastructure from physical artifact to model output.

The best teams I work with document AI systems like they do their master gages: with clear calibration intervals, environmental constraints, and uncertainty statements traceable to recognized standards. They don't wait for audits to prove their systems; they build the evidence into daily operations. Uncertainty bites at edges, but with proper metrology, those edges become your strongest audit defense.

Further Exploration

Ready to apply metrology rigor to your AI deployments? Start with these actionable steps:

- Map your AI decision points to critical product characteristics (use your FMEA)

- Build an uncertainty budget template specific to algorithmic measurements

- Implement monthly model validation against physical reference standards

- Document environmental constraints for each AI system (like you do for CMM rooms)

For deeper implementation guidance, check NIST's Industrial AI Management and Metrology (IAIMM) project resources (they are developing the exact traceability frameworks manufacturers need). Your auditor will thank you when they see that same level of rigor applied to your AI systems as to your calipers and gages.