Medical Device Metrology: Portable FDA-Compliant Comparison

Introduction: Beyond the Spec Sheet in Medical Device Metrology

When evaluating portable measurement systems for implant dimensional verification or surgical instrument inspection, engineers often fixate on resolution or brand reputation. But true capability hinges on something far less visible: the engineered stability across tool, process, and environment. This medical device metrology comparison cuts through marketing fluff to focus on portable FDA-compliant measurement systems that deliver traceable results under real production conditions, not just ideal lab settings. Medical device manufacturers operating under ISO 13485 and FDA 21 CFR Part 820 know that a micrometer reading 0.001 mm isn't useful if thermal drift or vibration pushes it outside your tolerance stack. I've seen $50K CMMs fail on Tuesday because Monday's heat wave expanded floor anchors by 15 µm (a lesson that now lives in every uncertainty budget I build). Shop by tolerance stack, environment, and workflow (or accept drift).

FAQ Deep Dive: Data-Driven Answers for Regulated Environments

How do I verify if a "portable" device meets FDA compliance for my process?

FDA compliance for measurement tools isn't about the device itself; it's about your documented measurement system analysis (MSA) under actual use conditions. A portable coordinate measuring arm might carry FDA 510(k) clearance for structural attributes, but that doesn't guarantee it meets your 50 µm tolerance for hip implant threads. For a compliance overview tailored to devices, see our medical metrology audit survival guide covering ISO 13485 and FDA 21 CFR Part 820 expectations. Focus on three traceable elements:

- Test accuracy ratio (TAR): At least 4:1 for critical features (e.g., no more than 12.5 µm of measurement uncertainty against a 50 µm tolerance)

- Environmental validation: Requires documented tests at your facility's min/max temp/humidity (per ISO 14253-2)

- Calibration chain: Certificates must show NIST traceability with stated uncertainty at your measurement range
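The TAR check in the first bullet is simple arithmetic and can be sketched as below. This is a minimal illustration, assuming TAR is computed as tolerance divided by measurement uncertainty, which matches the 50 µm / 12.5 µm example above; conventions for full-band versus half-band tolerance vary between quality systems, and the function names are hypothetical.

```python
def accuracy_ratio(tolerance_um: float, uncertainty_um: float) -> float:
    """Tolerance divided by measurement uncertainty (both in micrometres)."""
    if uncertainty_um <= 0:
        raise ValueError("uncertainty must be positive")
    return tolerance_um / uncertainty_um


def max_allowed_uncertainty_um(tolerance_um: float, min_ratio: float = 4.0) -> float:
    """Largest uncertainty that still satisfies the required ratio."""
    if min_ratio <= 0:
        raise ValueError("ratio must be positive")
    return tolerance_um / min_ratio


if __name__ == "__main__":
    # Figures from the bullet above: 50 µm tolerance, 4:1 minimum.
    print(max_allowed_uncertainty_um(50.0))  # 12.5
    print(accuracy_ratio(50.0, 12.5))        # 4.0
```

Feed it the uncertainty you measured at your own station, not the vendor's headline accuracy figure.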

Many vendors bury environmental coefficients in appendixes. Demand explicit tolerances for thermal expansion (e.g., "+/-0.25 µm/°C flank deviation at 20-25°C"), not just "compliant with ISO 10360." Last month, a client's "cleanroom-ready" optical comparator failed surgical instrument inspection because its spec sheet omitted coolant mist sensitivity. Assumptions and environment noted: Always run a 72-hour stability test in your workspace before acceptance.
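One way to reduce a stability log to numbers you can put in an acceptance record is sketched below. It assumes you repeatedly measure the same reference artifact and log elapsed hours with the reading's deviation from nominal in µm; the function name, the least-squares drift estimate, and the sample data are illustrative assumptions, not a prescribed procedure.

```python
from typing import Dict, Sequence


def stability_summary(hours: Sequence[float], deviations_um: Sequence[float]) -> Dict[str, float]:
    """Peak-to-peak spread and least-squares drift rate of a stability log."""
    n = len(hours)
    if n < 2 or n != len(deviations_um):
        raise ValueError("need at least two paired samples")
    mean_t = sum(hours) / n
    mean_y = sum(deviations_um) / n
    sxx = sum((t - mean_t) ** 2 for t in hours)
    if sxx == 0:
        raise ValueError("timestamps must not all be identical")
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in zip(hours, deviations_um))
    return {
        "peak_to_peak_um": max(deviations_um) - min(deviations_um),
        "drift_um_per_hour": sxy / sxx,
    }


if __name__ == "__main__":
    # Hypothetical log: one reading per day over 72 hours.
    log_hours = [0.0, 24.0, 48.0, 72.0]
    log_dev_um = [0.0, 0.6, 1.2, 1.8]
    print(stability_summary(log_hours, log_dev_um))
```

A real log would be sampled far more often than once a day, so that HVAC cycling and shift changes show up in the peak-to-peak figure.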

Why do portable tools fail surgical instrument inspection in cleanrooms despite lab-grade specs?

Cleanroom measurement tools face invisible enemies: static discharge, laminar airflow turbulence, and even technician glove material. In one study, electrostatic attraction from nitrile gloves deflected 10 µm probes by 3.2 µm, enough to scrap grade-A scalpel blades. Thermal effects are equally treacherous. A common handheld probe might specify "+/-2 µm accuracy" but omit that its aluminum housing expands 23 ppm/°C. At cleanroom-standard 20.5°C (vs. calibration at 20°C), that's about 0.58 µm of drift per 50 mm of travel, or 1.15 µm per 100 mm.
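The thermal figure follows from the linear expansion relation ΔL = α · ΔT · L. A minimal sketch, assuming uniform temperature along the housing and a constant coefficient (the function name is hypothetical): with 23 ppm/°C and a 0.5°C offset, the model gives roughly 0.58 µm over 50 mm and 1.15 µm over 100 mm.

```python
def thermal_drift_um(cte_ppm_per_c: float, delta_t_c: float, length_mm: float) -> float:
    """Linear expansion in micrometres.

    ppm/°C x °C x mm gives nanometres, so divide by 1000 for micrometres.
    """
    return cte_ppm_per_c * delta_t_c * length_mm / 1000.0


if __name__ == "__main__":
    # Aluminum housing, 20.5°C in use vs. 20°C at calibration.
    print(f"{thermal_drift_um(23.0, 0.5, 50.0):.3f} µm over 50 mm")
    print(f"{thermal_drift_um(23.0, 0.5, 100.0):.3f} µm over 100 mm")
```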

Build your uncertainty budget like this:

Total expanded uncertainty U (k=2) = 2 × √[(Thermal drift)² + (Vibration)² + (Calibration residual)²], with each term entered as a standard uncertainty in the same units
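A minimal sketch of that budget in code, assuming the contributors are uncorrelated standard uncertainties in µm so they combine by root-sum-square; the expanded value is the coverage factor k times the square root of the summed squares. The component values are hypothetical, chosen only to make the arithmetic easy to follow.

```python
import math
from typing import Mapping


def expanded_uncertainty_um(components_um: Mapping[str, float], k: float = 2.0) -> float:
    """Root-sum-square of standard uncertainties, scaled by coverage factor k."""
    if not components_um:
        raise ValueError("at least one uncertainty component is required")
    combined = math.sqrt(sum(u ** 2 for u in components_um.values()))
    return k * combined


if __name__ == "__main__":
    # Hypothetical standard uncertainties in micrometres.
    budget = {"thermal_drift": 3.0, "vibration": 4.0, "calibration_residual": 12.0}
    print(expanded_uncertainty_um(budget))  # 26.0
```

Keeping the contributors in a named mapping makes it easy to see which term dominates and to add rows (probe force, operator, fixturing) as you quantify them.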

I've logged 0.8°C fluctuations in "stable" ISO Class 5 rooms from HVAC cycling, enough to invalidate supposedly 4:1 TAR systems for 100 µm tolerances. Correlate lab vs. shop data hourly like I did during that infamous heat wave incident. Spoiler: The shop-floor metrology workstation always needs tighter environmental controls than the cleanroom itself.

What's the biggest oversight in biocompatibility testing equipment selection?

Most teams fixate on sensor resolution while ignoring contact force stability. For implant dimensional verification, inconsistent probe force causes elastic deformation in polymers like PEEK or UHMWPE. A 0.5 N variation in touch-trigger probes can compress acetabular liners by 8-12 µm, swallowing half your tolerance. Protocols built on ISO 10993-18 (an ISO standard, not FDA guidance) may require dimensional confirmation after extraction, but rarely specify measurement force.

Validate biocompatibility testing equipment using:

- Controlled force tests: Measure repeatability at 0.1 N, 0.3 N, and 0.5 N loads on witness samples

- Material-specific compensation: Build error bars for each polymer's creep rate (e.g., +5.2 µm @ 0.3 N for 30 s dwell)

- Surface finish correlation: Roughness > Ra 0.8 µm requires 30% higher force to penetrate oxide layers
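The controlled force test in the first bullet can be summarized with a few lines of code. This is a sketch under stated assumptions: readings are deviations from nominal in µm on a witness sample, grouped by probe force in newtons, and the data and function names are hypothetical.

```python
from statistics import mean, stdev
from typing import Dict, List, Tuple


def summarize_by_force(readings_um: Dict[float, List[float]]) -> Dict[float, Tuple[float, float]]:
    """Map each probe force (N) to (mean, sample standard deviation) in µm."""
    summary = {}
    for force_n in sorted(readings_um):
        values = readings_um[force_n]
        if len(values) < 2:
            raise ValueError("need at least two readings per force level")
        summary[force_n] = (mean(values), stdev(values))
    return summary


def apparent_compression_um(summary: Dict[float, Tuple[float, float]],
                            low_force_n: float, high_force_n: float) -> float:
    """Shift in mean reading between two force levels (low minus high)."""
    return summary[low_force_n][0] - summary[high_force_n][0]


# Hypothetical witness-sample data, deviations from nominal in µm.
WITNESS_READINGS_UM = {
    0.1: [0.0, 1.0, -1.0, 0.0],
    0.3: [-4.0, -5.0, -6.0, -5.0],
    0.5: [-10.0, -9.0, -11.0, -10.0],
}

if __name__ == "__main__":
    summary = summarize_by_force(WITNESS_READINGS_UM)
    for force_n, (avg, sd) in summary.items():
        print(f"{force_n:.1f} N: mean {avg:+.2f} µm, repeatability (1σ) {sd:.2f} µm")
    print(f"0.1 N to 0.5 N shift: {apparent_compression_um(summary, 0.1, 0.5):.2f} µm")
```

Record the dwell time alongside each data set, since creep makes the mean shift depend on how long the probe stays in contact.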

One orthopedic client saved $220K/month in false rejects after correlating CMM probe force to actual implant compression data. Assumptions and environment noted: Always state force values and dwell time in your calibration records; auditors will ask.

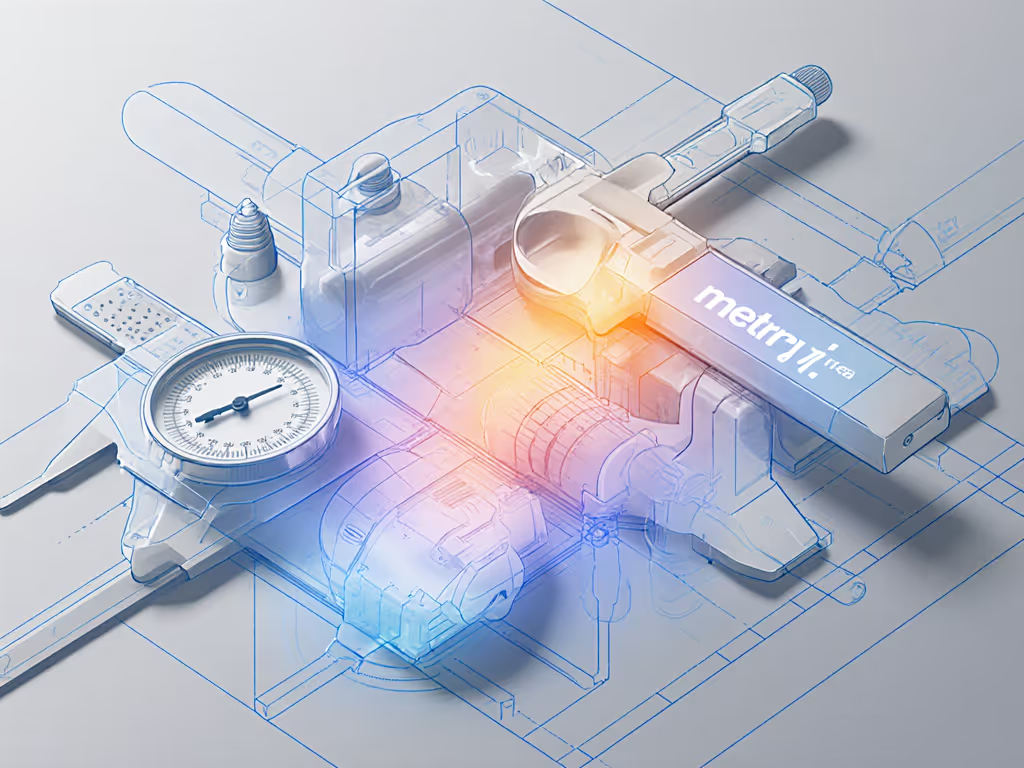

How do I compare portable systems when specs deliberately obscure real capability?

Spec sheets thrive on ambiguity. "Resolution: 0.1 µm" means nothing if repeatability is 1.5 µm. Demand these actionable metrics:

| Parameter | Meaningless Spec | Actionable Metric |
|---|---|---|
| Accuracy | "ISO 230-2 compliant" | "+/-(1.8 + L/200) µm at 18-22°C, 45-55% RH" |
| Repeatability | "0.5 µm typical" | "σ < 0.3 µm (n=50), 23°C +/-0.5°C" |
| Environmental | "Operates 5-40°C" | "Drift: 0.12 µm/°C after 1 hr stabilization" |

A recent audit found 68% of "portable FDA-compliant" tools lacked thermal drift coefficients. Without them, you can't calculate if a $15K handheld CMM fits your 75 µm tolerance stack for knee arthroplasty jigs. Always require vendors to provide actual uncertainty budgets with sources quantified, not just "< manufacturer's spec." Units and conditions specified, or walk away.
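Once a vendor supplies metrics in the actionable form, the fit against a tolerance becomes a calculation rather than a guess. The sketch below uses the example figures from the table and makes two assumptions the table does not state: L is the measured length in mm, and the thermal drift is added linearly to the accuracy term as a conservative worst case rather than combined by root-sum-square. Function names are hypothetical.

```python
def length_dependent_error_um(length_mm: float, base_um: float = 1.8,
                              length_divisor: float = 200.0) -> float:
    """Accuracy spec of the form (base + L/divisor) µm, with L assumed in mm."""
    if length_mm < 0:
        raise ValueError("length must be non-negative")
    return base_um + length_mm / length_divisor


def worst_case_error_um(length_mm: float, delta_t_c: float,
                        drift_um_per_c: float = 0.12) -> float:
    """Accuracy term plus thermal drift, added linearly (conservative)."""
    return length_dependent_error_um(length_mm) + abs(delta_t_c) * drift_um_per_c


if __name__ == "__main__":
    # Example: 100 mm feature, station runs 2°C away from the stabilized condition.
    error_um = worst_case_error_um(100.0, 2.0)
    tolerance_um = 75.0
    print(f"worst-case error: {error_um:.2f} µm")
    print(f"tolerance-to-error ratio: {tolerance_um / error_um:.1f}:1")
```

This covers only the two contributors a spec sheet can give you; probe force, operator, and fixturing terms still have to come from your own MSA.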

What's the non-negotiable for passing FDA audits on measurement systems?

Your MSA documentation must prove continuous capability, not just one-time calibration. Auditors will ask:

- How environmental shifts (e.g., summer humidity spikes) are compensated

- Whether gauge R&R includes technician variability in full cleanroom garb

- If master artifacts are calibrated under identical conditions as production checks

Last quarter, I helped a client avoid a 483 observation by showing correlated thermal logs from their metrology bench and implant grinding station. We proved their 10:1 TAR held because we conditioned parts for 4 hours at measurement temp. Contrast this with another shop that failed when auditors spotted gauge blocks stored on the granite surface plate (exposed to coolant mist). Assumptions and environment noted: Document everything, especially what's not in the manual.

Conclusion: Engineering Measurement Capability, Not Buying It

Portable FDA-compliant measurement only succeeds when you treat metrology as a system, not a point solution. The right tool for surgical instrument inspection won't win beauty contests; it will earn trust through documented stability under your specific tolerances and environmental constraints. For procurement planning, compare lifecycle costs in our fixed vs portable metrology TCO guide. Reject generic "medical-grade" claims. Demand traceable uncertainty budgets that include real-world variables: temperature gradients, glove interference, probe force creep. Remember that surface plate lesson: capability lives where lab meets shop floor.

Your next step: Map one critical process (e.g., bone screw thread inspection) using this framework:

- Define explicit tolerances with safety margins

- Quantify environmental variables at your station

- Calculate minimum required TAR (4:1 for critical features)

- Test candidate tools under worst-case conditions

When you engineer measurement capability across tool, process, and environment, compliance becomes inevitable, not a gamble. Dive deeper into ISO 14253-1's decision rules for weighing measurement uncertainty against tolerance, or NIST HB 105's thermal compensation guides. The data won't lie if you're brave enough to measure it right.